Monday, July 11, 2011

Attending MaxEnt 2011 in Waterloo, Canada

I am attending MaxEnt 2011 in Waterloo, Canada.

Travelling to Waterloo was slightly involved for a few reasons. The first was the visa: thankfully, it arrived a week before my departure, but a few of my friends could not make it because of the delays. The second was that the airfare to the nearby airport was very expensive, so I travelled via Toronto (about an hour's drive away).

The best part of the airport, however, was the free wifi. I quickly joined the network and started calling people while waiting for my shuttle to arrive.

At MaxEnt 2011, I will be presenting my work on collaborative experimental design by two intelligent agents. The abstract of the talk can be found here ...(PDF!)

The work builds on the successful past developments (by the Giants) of Bayesian inference, experimental design techniques, and the order-theoretic approach to questions.

We view the intelligent agents as question-asking machines, and we want them to design experiments automatically to achieve a given goal. Here we illustrate how the joint entropy turns out to be the useful quantity when we want the agents to learn efficiently together.
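To give a feel for why the joint entropy matters, here is a toy sketch in Python. It is not the algorithm from the paper; it assumes a discrete posterior over hypotheses and noise-free experiments whose outcome is a deterministic function of the hypothesis (in that case the entropy of the predicted outcome equals the expected information gain). All names (`joint_outcome_entropy`, `choose_joint_experiments`, the `bit*` experiments) are hypothetical. Each agent picks one experiment, and the pair is chosen to maximize the entropy of the *joint* predicted outcome, which automatically penalizes two agents asking redundant questions.

```python
import math
from collections import Counter
from itertools import product


def entropy_bits(weights):
    """Shannon entropy (bits) of a discrete distribution given as a Counter of weights."""
    total = sum(weights.values())
    return -sum((w / total) * math.log2(w / total) for w in weights.values() if w > 0)


def joint_outcome_entropy(posterior, exp_a, exp_b):
    """H(O_a, O_b): entropy of the pair of predicted outcomes under the current posterior.

    posterior: dict mapping hypothesis -> probability
    exp_a, exp_b: noise-free experiments, i.e. functions hypothesis -> outcome (assumption)
    """
    joint = Counter()
    for h, p in posterior.items():
        joint[(exp_a(h), exp_b(h))] += p
    return entropy_bits(joint)


def choose_joint_experiments(posterior, options_a, options_b):
    """Pick one experiment per agent so that the joint outcome entropy is maximal."""
    best = max(
        product(options_a.items(), options_b.items()),
        key=lambda pair: joint_outcome_entropy(posterior, pair[0][1], pair[1][1]),
    )
    (name_a, exp_a), (name_b, exp_b) = best
    return name_a, name_b, joint_outcome_entropy(posterior, exp_a, exp_b)


# Toy example: 8 equally probable hypotheses; each experiment reveals one bit of the hypothesis.
posterior = {h: 1 / 8 for h in range(8)}
options_a = {"bit0": lambda h: h & 1, "bit1": lambda h: (h >> 1) & 1}
options_b = {"bit0_again": lambda h: h & 1, "bit2": lambda h: (h >> 2) & 1}

print(choose_joint_experiments(posterior, options_a, options_b))  # ('bit0', 'bit2', 2.0)
```

Every single experiment here is worth 1 bit on its own, so two agents maximizing their own outcome entropy independently could both measure bit 0 and learn only 1 bit together; maximizing the joint entropy avoids that and yields 2 bits.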

The details are in the paper, which will be posted on arXiv soon.

Friday, July 2, 2010

Heading for MaxEnt 2010, Chamonix, France

I will be visiting France for a week to attend the MaxEnt 2010 Conference.

There, I will be presenting my work on "Entropy Based Search Algorithm for Experimental Design".

http://maxent2010.inrialpes.fr/program/complete-program/#6.1

I have uploaded some pictures to Facebook. The link is:

http://www.facebook.com/

Friday, June 18, 2010

Diffusive Nested Sampling: Brewer et al.

Brendon Brewer et al. have a newer version of the nested sampling algorithm, which they call Diffusive Nested Sampling (DNS). As the name indicates, it differs from "classic" nested sampling principally in how it treats the hard constraint. Instead of an evolving hard constraint, it lets the samples explore a mixture of nested probability distributions, each successive distribution enclosing about e^-1 times the prior mass of the previous one. The mixture weights can be chosen at will (a hack :P), which is a clever trick for exploration. This reinforces the idea of "no peaks left behind" for multimodal problems.
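To make the mixture concrete, here is a minimal Python sketch of the log target density that a DNS-style sampler explores. The names are hypothetical and this is not the DNest API. Assumptions: level j has likelihood threshold L_j (with L_0 = -inf, i.e. level 0 is the prior itself), level j encloses prior mass exactly X_j = e^-j, each component is the prior restricted to L > L_j and divided by X_j so that it is normalized, and the mixture weights w_j are free (uniform by default here).

```python
import math


def dns_log_target(log_prior, log_like, level_log_likes, weights=None):
    """Log density of a mixture of constrained priors, as in a DNS-style sampler (sketch).

    level_log_likes: increasing thresholds L_0 < L_1 < ..., with L_0 = -inf (the prior).
    Level j is assumed to enclose prior mass X_j = exp(-j) exactly.
    weights: mixture weights w_j summing to 1 (uniform if omitted).
    """
    n = len(level_log_likes)
    if weights is None:
        weights = [1.0 / n] * n
    if len(weights) != n:
        raise ValueError("need one weight per level")
    total = 0.0
    for j, (threshold, w) in enumerate(zip(level_log_likes, weights)):
        if log_like > threshold:
            # component j: pi(theta) * 1[L > L_j] / X_j, and 1 / X_j = exp(j)
            total += w * math.exp(j)
    # total >= w_0 > 0 everywhere: the prior component keeps every mode reachable
    return log_prior + math.log(total)
```

Because every component integrates to 1, the mixture integrates to the sum of the weights whenever the X_j are exact. In DNS itself the level masses are only estimates, since levels are placed so that each encloses about e^-1 of the previous one's prior mass.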

On a test problem they claim that DNS "can achieve four times the accuracy of classic Nested Sampling, for the same computational effort; equivalent to a factor of 16 speedup".
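The conversion from accuracy to speedup assumes the usual Monte Carlo scaling, where the error of the estimate falls as the inverse square root of the computational effort N. A 4 times smaller error then costs classic nested sampling 4^2 = 16 times as much effort:

$$
\text{error} \propto \frac{1}{\sqrt{N}}
\quad\Longrightarrow\quad
\frac{\text{error}_{\text{classic}}}{\text{error}_{\text{DNS}}}\bigg|_{\text{equal } N} = 4
\quad\Longleftrightarrow\quad
\frac{N_{\text{classic}}}{N_{\text{DNS}}}\bigg|_{\text{equal error}} = 4^2 = 16
$$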

PS:

What can grow out of side talks in a conference?

If you know the power of scribbling on napkin paper, you would not be surprised.

The paper is available on arXiv:

http://arxiv.org/abs/0912.2380

The code is available at http://lindor.physics.ucsb.edu/DNest/ and comes with handy instructions.

---

Thanks to Dr. Brewer for pointing out typos in the draft, for his suggestions, and for allowing me to use the figures.

The original nested sampling code is available in the book by Sivia and Skilling, Data Analysis: A Bayesian Tutorial.

Edit: Sep 5, 2013

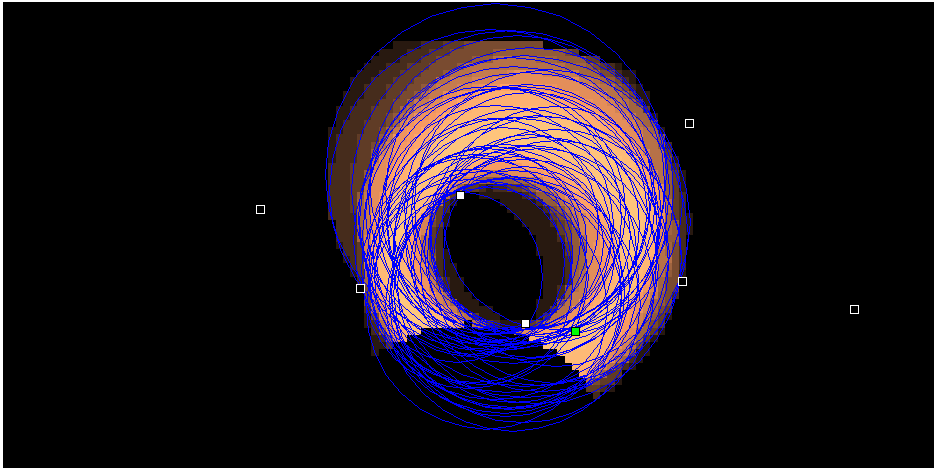

An illustrative animation of Diffusive Nested Sampling (www.github.com/eggplantbren/DNest3) sampling a multimodal posterior distribution. The size of the yellow circle indicates the importance weight. The method can travel between the modes because the target distribution includes the (uniform) prior as a mixture component.

I have not played with it yet. However, it seems worth trying. Just a note to myself.

Monday, May 10, 2010

Nested Sampling Algorithm (John Skilling)

Nested Sampling was developed by John Skilling (http://www.inference.phy.cam.ac.uk/bayesys/box/nested.pdf and http://ba.stat.cmu.edu/journal/2006/vol01/issue04/skilling.pdf).

Nested Sampling is a modified Markov chain Monte Carlo algorithm for exploring the posterior probability of a given model. Its power lies in the fact that it is designed to compute both posterior quantities (such as the mean parameter values) and the Evidence. The algorithm is initialized by drawing samples at random from the prior. It then contracts the distribution of samples around regions of high likelihood by repeatedly discarding the sample with the lowest likelihood, Lworst.
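For reference, in Skilling's formulation the evidence becomes a one-dimensional integral over the prior mass X enclosed by a likelihood contour. With N samples, each discarded Lworst shrinks the enclosed mass by a random factor t_i with E[log t_i] = -1/N, which gives the usual estimate below (L_i is the likelihood of the sample discarded at step i):

$$
Z = \int L(\theta)\,\pi(\theta)\,d\theta = \int_0^1 L(X)\,dX,
\qquad
X(\lambda) = \int_{L(\theta) > \lambda} \pi(\theta)\,d\theta
$$

$$
X_i \approx e^{-i/N},
\qquad
Z \approx \sum_i L_i\,\left(X_{i-1} - X_i\right)
$$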

To keep the number of samples constant, another surviving sample is chosen at random and duplicated. The copy is then randomized by taking Markov chain Monte Carlo steps subject to a hard constraint: a move is accepted only if the new likelihood exceeds the current threshold, L > Lworst. This keeps the samples distributed according to the prior (uniformly in prior mass) within the constrained region, so every new sample has a likelihood above the current threshold. The process is iterated until convergence. The evidence is the area under the sorted likelihood plotted as a function of the enclosed prior mass. Once the algorithm has converged, one can compute the mean parameter values as well as the log evidence.
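A minimal Python sketch of the loop described above, for a toy problem. Assumptions (none of these are from Skilling's code): a uniform prior on [0, 1] and an unnormalised Gaussian likelihood with mu = 0.5 and sigma = 0.1, so the exact evidence is about sigma * sqrt(2 pi); the prior mass is assigned deterministically as X_i = exp(-i/N); the MCMC is a Gaussian random walk whose step size is taken from the spread of the live samples; and a fixed number of iterations replaces a proper convergence test. Function names are hypothetical.

```python
import math
import random


def log_likelihood(theta, mu=0.5, sigma=0.1):
    """Toy problem (assumption): unnormalised Gaussian log-likelihood, uniform prior on [0, 1]."""
    return -0.5 * ((theta - mu) / sigma) ** 2


def log_add(a, b):
    """Return log(exp(a) + exp(b)) without overflow or underflow."""
    if a == -math.inf:
        return b
    if b == -math.inf:
        return a
    hi, lo = max(a, b), min(a, b)
    return hi + math.log1p(math.exp(lo - hi))


def nested_sampling(n_live=100, n_iter=1000, mcmc_steps=20, seed=1):
    """Return (log evidence, posterior mean of theta) for the toy problem."""
    rng = random.Random(seed)
    # Initialise: n_live samples drawn at random from the prior
    live = [rng.random() for _ in range(n_live)]
    live_logl = [log_likelihood(t) for t in live]
    log_z = -math.inf        # running log evidence
    dead = []                # (theta, log of L_i * w_i) for every discarded sample
    log_x_prev = 0.0         # log of enclosed prior mass; X_0 = 1

    for i in range(1, n_iter + 1):
        # Discard the sample with the lowest likelihood, L_worst
        worst = min(range(n_live), key=lambda k: live_logl[k])
        logl_worst = live_logl[worst]
        # Enclosed prior mass shrinks on average as X_i = exp(-i / n_live)
        log_x = -i / n_live
        log_width = log_x_prev + math.log1p(-math.exp(log_x - log_x_prev))  # log(X_{i-1} - X_i)
        log_w = logl_worst + log_width
        log_z = log_add(log_z, log_w)
        dead.append((live[worst], log_w))
        log_x_prev = log_x

        # Duplicate another live sample chosen at random ...
        copy = worst
        while copy == worst:
            copy = rng.randrange(n_live)
        theta, logl = live[copy], live_logl[copy]
        # ... and randomise it with MCMC steps under the hard constraint L > L_worst.
        # Step size from the spread of the live samples (a simple heuristic).
        centre = sum(live) / n_live
        step = math.sqrt(sum((t - centre) ** 2 for t in live) / n_live)
        for _ in range(mcmc_steps):
            trial = theta + step * rng.gauss(0.0, 1.0)
            if 0.0 <= trial <= 1.0:                  # stay inside the prior's support
                trial_logl = log_likelihood(trial)
                if trial_logl > logl_worst:          # hard likelihood constraint
                    theta, logl = trial, trial_logl
        live[worst], live_logl[worst] = theta, logl

    # Account for the prior mass X_final still held by the remaining live samples
    log_share = log_x_prev - math.log(n_live)
    for theta_live, logl_live in zip(live, live_logl):
        log_w = logl_live + log_share
        log_z = log_add(log_z, log_w)
        dead.append((theta_live, log_w))

    # Posterior weights p_i = L_i w_i / Z give posterior summaries such as the mean
    mean = sum(t * math.exp(log_w - log_z) for t, log_w in dead)
    return log_z, mean


log_z, mean = nested_sampling()
exact = math.log(0.1 * math.sqrt(2 * math.pi))
print(f"log Z = {log_z:.3f} (exact {exact:.3f}), posterior mean = {mean:.3f}")
```

The estimate of log Z should land near the exact value of about -1.38, within a Monte Carlo error that shrinks roughly as the inverse square root of the number of live samples, and the posterior mean should come out close to 0.5.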

For a nice description of Nested Sampling, the book by Sivia and Skilling, Data Analysis: A Bayesian Tutorial, is highly recommended.

Code in C, Python and R, with an example of the lighthouse problem, is available at:

http://www.inference.phy.cam.ac.uk/bayesys/

The paper is available at:

http://www.inference.phy.cam.ac.uk/bayesys/nest.ps.gz
